Chubby

This post is based on the paper The Chubby lock service for loosely-coupled distributed systems.

Aim

Chubby is built to provide coarse-grained locking (a lock used to protect multiple resources together) as well as reliable low-volume storage for a loosely coupled distributed system. The purpose of the lock service is to allow its clients to synchronize its activities and has goals like reliability, availability to a moderately large set of clients. Chubby’ client interface is like a file system with read/write to files, advisory locks and providing notification to events (eg. file changes). Services within Google like GFS and Bigtable use Chubby to elect a master (leader election), store small metadata, etc.

Another alternative for providing leader election was to build a Paxos library(Note - 1) for developers to use rather than a centralized lock service. But there were some important advantages to using Chubby instead of a Paxos library (which is also provided).

- most projects don’t start off needing high availability, upon which replication and primary election is added. Using a lock service makes it easier to keep the existing program structure and communication patter.

- service that elect a primary need a mechanism to advertise results. This can be done with a name service but Chubby is well suited for this to reduce number of dependencies and the consistency it provides (also the client caching feature that it provides).

- A lock based interface is more common to developers and easier to use when provided by the lock service so that developers don’t have to think of machine failures etc.

- In a loose sense the function that the clients can perform even when a majority of the client nodes have failed as the Chubby may be operational.

On the choice of fine-grained vs coarse grained locking, they both have different requirements from the lock service. For eg. in coarse grained locking the lock is expected to be held for a long duration and locks are expected to survive lock server failures as the transfer of operations (eg. due to change in lock for master) from client to another may be more costly than the overhead to survive failures. In the other case it may be better to not maintain locks during failure as the time penalty to drop locks is not severe as they are held for short durations.

All the decisions of including certain features in Chubby were made to fit needs like allowing moderately high no of clients to interact with a Chubby file, notification mechanism to client when there is a change in service’s primary to avoid polling, consistent caching than other techniques and to avoid periodic polling and access control mechanisms.

System Structure

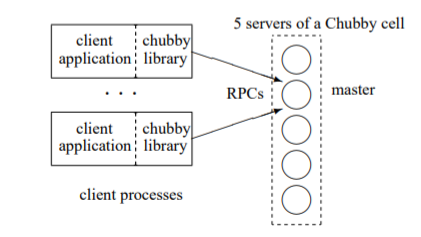

A client interacts with the Chubby cell (of 5 replica servers placed as to reduce the likelihood of failures eg. in different racks like GFS) using the Chubby client library through RPC calls. The replicas use distributed consensus to elect a master. The master is elected with a promise that replicas will not elect a different master for a “master lease” interval which is periodically renewed when the master continues to win a majority of the vote. All replicas maintain a replicated db (mentioned in the earlier post) to which only the master initiates reads/writes. During a master failure, the replicas run a new leader election when the lease expires. When a replica fails and does not recover a new machine is allocated (by an external system) and the lock server binary is run. The master would periodically poll the DNS to know replica changes and maintain a list. This replica would get the db state from other active replicas. Clients contact the service to get the master replica (either directly or given by a replica server) and initiate further actions.

File System Interface, Locks and Events

An example: /ls/foo/wombat/pouch where ls stands for lock service, foo is name of the Chubby cell -> resolve to one or more servers on DNS lookup. Each file contains a sequence of bytes. The design differs from UNIX in several ways. The name space consists of only files and directories collectively called nodes. Each node has one name within its cell (Chubby) i.e. node names are unique no symbolic or hard links. Nodes can be temporary or ephemeral (with these deleted when no client has them open) and any node can act as advisory reader/writer lock.

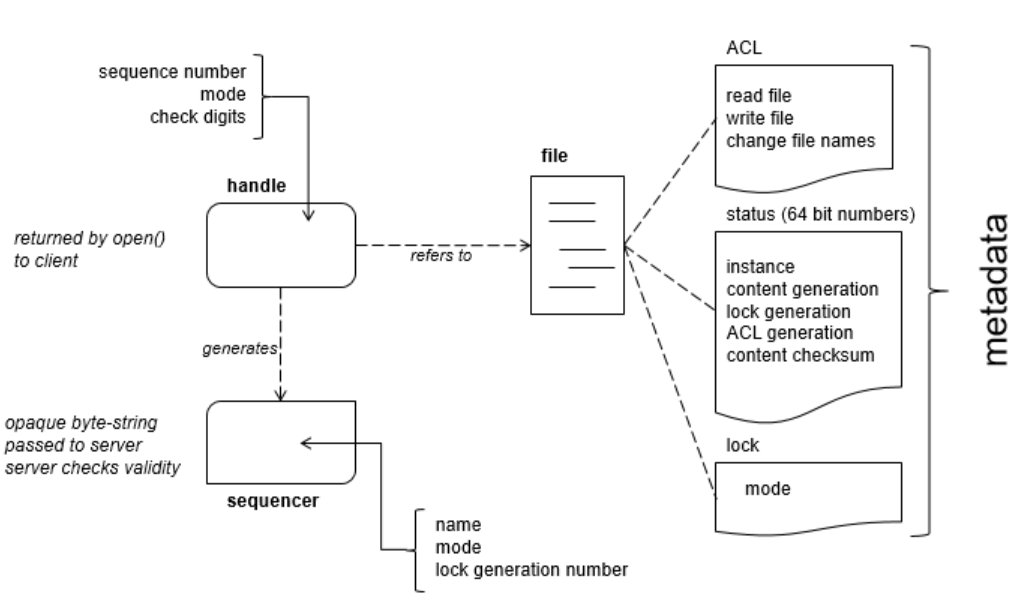

Metadata at nodes include three access control lists (ACL) used to control r/w and changing ACL names for nodes. A node inherits the ACL from parent with each ACL being a file itself part of the cell’s local namespace, eg. if file F has an write ACL named foo and the ACL file foo has an entry bar, the user bar is permitted to write. Other metadata includes four increasing 64-bit numbers for counters as to no of times locks where held (lock generation number), ACL changes, etc.

Client open nodes to obtain handles which include check digits(not clear how this works but may be related to this), sequence no. to tell master that it created the handle or previous master and mode information provided at open time to allow master to allow recreation of this state when new master takes over in case of failures.

Each Chubby file/directory can act as a reader-writer lock in shared on exclusive (only one holds the lock). Locks in Chubby are advisory like mutexes, they conflict with other attempts to acquire the same lock and unlike mandatory they don’t make locked objects inaccessible to clients. The reasons for having this type of lock are that Chubby locks are used to protect resources in other services and not just the file associated with the lock, access may be needed for debugging/administrative reasons, etc.

Locking in a distributed system is complex, eg. a client holding a lock may shut down just after its sends some operations to be performed (on the objects protected by the lock). Until the operations arrive (eg. at the servers/storage having the object) the lock may now be held by another client, thus the operations arriving may be executed without the lock protection and on potentially consistent data. Chubby provides use of sequence numbers for interactions that require use of locks. A client can request a sequencer (byte string containing name of the lock, mode (shared or exclusive), lock generation no) and pass it to application servers/services to perform operations (eg. pass it to a file system server along with the operation to write some data into file). The recipient server upon receiving the operation and sequencer need to check its validity and if it has the appropriate mode (slide 13 in this presentation was helpful to confirm what I understood). Also, client can specify a lock-delay: if the current client holding lock becomes inaccessible then the lock server will prevent clients from claiming the lock until the lock-delay, in other cases when the client releases a lock normally, it is available for other clients to claim.

Client can subscribe to a number of events like file content changes, node additions, chubby master failover, handle invalid, etc. and they will get notified after the event occurs.

An example of using the Chubby to perform primary election is as follows: all potential primaries attempt to open the lock file and attempt to acquire a lock, one succeeds to become the primary. The primary writes its identity to the lock file for other replicas to know and ideally requests a sequencer to pass to servers it communicates with.

Caching and Sessions

To reduce read traffic, client cache file data and metadata in an in-memory, consistent, write through cache that is maintained by a lease mechanism (has to refresh in this time). When the Chubby master receives any changes to the file or metadata, the modification is blocked until it receives a response (for a request to invalidate that it sends) from all clients that may have cached this (or waits for lease time to expire).

A chubby session is a relationship between the Chubby cell and the client during which the client’s handles, locks and cached data all remain valid. The client requests a new session on contacting Chubby and is terminated explicitly or if the session is idle (no open handles and calls for a minute). Each session has a lease: time until which the Chubby master guarantees not to terminate the session. The KeepAlive RPC (periodic handshakes) from the clients helps to extend this timeout. Master can also send any events or cache invalidation messages piggybacked on KeepAlive replies, this simplifies the client and allows the protocol to operate through firewalls (when connection initiation is present in only one direction).

The client maintains a local lease timeout that is a conservative approximation of the master’s lease timeout, when it expires the client becomes unsure if the masters lease has also expired and disables its cache and empties it (jeopardy phase). It waits for some grace period interval to see if it can successfully send the keepalive and extend the timeout, so it can enable the cache. This jeopardy event can be used by the application to slow down its operations. Any operations(except a few) on a handle fails when the lease expires.

Failover

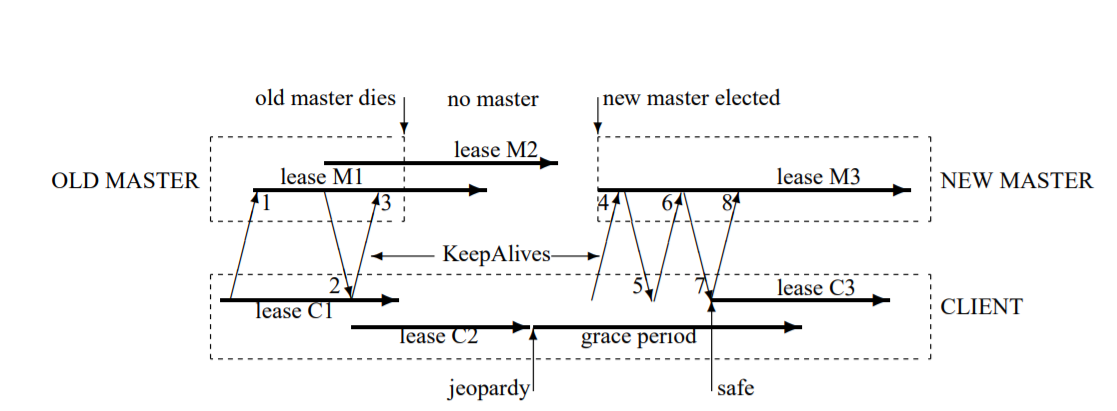

Master failure or losing membership results in it discarding its in-memory state about sessions, handles and locks. The timer of active sessions is considered to paused when this happens so that if a new master election occurs quickly the client can contact the new master to extend lease. If the election takes time and the clients local lease expires, it comes into jeopardy and waits for the grace period allowing for the session to be maintained until then (but the cache is cleared and disabled).

The figure above shows the events in a long master fail-over event and the client using its grace period to preserve its session. The message 1 is a keepalive to the master (after which the master waits for sometime ideally until the current lease M1 is close to expiring). The master commits to the new extended lease M2 before responding and the client on receiving the response switches to a new local conservative lease C2. During the failover, C2 expires and the client is in the jeopardy phase. After the new master election the client’s keepalive will come to the new master.

When a new master starts it picks up a new client epoch number (which clients pass on every call). Requests from clients with older epoch nos are rejected and provided with the new epoch no. as a reply. The master does not start performing session related requests and builds in memory data structure for session and locks using db state, sessions leases are extended to the maximum that previous master have been using. The master now lets clients keepalives messages (but no other session related op) and as a response it emits a failover event to each session causing clients to flush caches (if they haven’t already). Master waits until each session acknowledges the failover event or waits till the session expires. Now the master can process with all operations. When clients send request wrt to a handle created prior the failure(detected from the sequence no. in the handle), the new master uses this to recreate the in-memory representation of the handle and performs the request. If this handle is closed its recorded (as to prevent any other client not to recreate if their request is delayed) and deleting ephemeral files with no handles are done.

So in the example, the clients keepalive request to the master, 4, to extend the session is first rejected due to wrong epoch no. The request 6 also does not extend the lease as M3 (set by the new master to maximum) but the reply will tell the client it can set a new local lease C3 and continue any other operations. The new master and client coordinate to provide an illusion to the application that no failure has occurred. If the grace period had been expired before the client would have reported error to the application.

Miscellaneous

This includes a list of other features, optimization and problems in Chubby mentioned in the paper

- Chubby backups the db snapshot to a GFS file server in a different building.

- Chubby allows mirroring of a collection of files between cells, it can also be used to copy configuration files among cluster around the world.

- Chubby master needs to handle 90K+ clients and may scaling mechanisms:

- increasing timeouts for lesser KeepAlive RPCs, many chubby cells per datacenter

- Proxies: the protocol can be proxied to reduce the server load by handling keepalive (which is majority of the traffic) and read requests (10% of the total traffic) through the proxy cache.

- Partitioning: Chubby cell of N partitions; each partition having its own master and replicas and nodes would be hashed to a particular partition.

- Chubby’s client code is complex and a non-trivial client side library so to avoid maintaining it for different languages, they use a protocol conversion server that export a simple RPC protocol.

- Chubby is used as a name service at Google because it uses explicit cache invalidations (and the cache is maintained constant rat keepalive) by rather than a TTL used in a DNS cache to expire records (and potentially leading to many DNS lookups). They have a protocol conversion server to only work with client using DNS feature (as some tradeoff can be made here to decrease load).

- In the new deign, sessions are not stored in db but recreated after failover as handles are, when the clients contact the new master.

- Abusive clients: tried to have review with team using Chubby to note the usage. Lack of aggressive caching: when developers write loops that retry infinitely when a file is not present or poll and not using caching overloaded the master. Lack of storage quotas: Chubby was not a storage system and did not have a concept of quotas, and when application started storing data which grew very large over time this became an issue. So a file size limit of 256KB was introduced.

- Lessons learned: developers rarely consider availability and that Chubby also may be unavailable for some duration, poor choice of API provided have had unexpected affects, transport protocol for RPCs can cause issues with TCP’s backoff for congestion not considering application level timeouts and UDP having no congestion avoidance.

Note

(1) Paxos as mentioned in earlier posts was used by a group of nodes to achieved consensus or agree upon a single value. For a leader election think of the values being node ids and the Paxos algorithm is run to select one node id which will act as the leader.

- Please let me know if I misinterpreted or missed something.